
Data Science: The Ultimate Guide to Understanding and Applying Data

In an era when data is often called the new gold, knowing how to harness its power is crucial. Imagine predicting customer behavior, optimizing operations, or even transforming healthcare, all through insights drawn from data. Welcome to the world of data science, where numbers tell compelling stories and drive transformative decisions. Whether you’re a seasoned professional or just starting out, this guide will illuminate the path to mastering data science and leveraging its potential.

Introduction

Data science stands at the intersection of statistics, computer science, and domain expertise, emerging as a pivotal force in today’s digital landscape. As organizations grapple with massive volumes of data, the demand for skilled data scientists who can extract meaningful insights continues to surge. This guide delves into the multifaceted realm of data science, exploring its core concepts, essential tools, diverse applications, and the pathways to building a successful career. By understanding the intricacies of data science, you can unlock opportunities to drive innovation and gain a competitive edge in various industries.

What is Data Science?

Data science is an interdisciplinary field that combines scientific methods, processes, algorithms, and systems to extract knowledge and insights from both structured and unstructured data. Unlike traditional data analysis, data science encompasses a broader spectrum, integrating advanced techniques from machine learning, artificial intelligence, and big data technologies. Here’s a deeper look into what defines data science:

- Interdisciplinary Approach: Data science draws from various fields such as statistics, computer science, and domain-specific knowledge to analyze data comprehensively.

- Data Processing: It involves collecting, cleaning, and transforming raw data into a usable format, ensuring accuracy and reliability.

- Advanced Analytics: Utilizing machine learning algorithms and statistical models to identify patterns, trends, and correlations within datasets.

- Visualization: Presenting data insights through visual tools like charts, graphs, and dashboards to facilitate informed decision-making.

- Predictive Modeling: Building models that can forecast future trends based on historical data, aiding in strategic planning and risk management.

Data science is not just about crunching numbers; it’s about storytelling with data. By transforming complex datasets into understandable narratives, data scientists empower organizations to make data-driven decisions that drive growth and innovation.

Why Data Science is Important in Today’s World

In today’s data-driven landscape, the importance of data science cannot be overstated. With the exponential growth of data generated every second, organizations across various sectors leverage data science to stay competitive and innovative. Here’s why data science is indispensable:

- Informed Decision-Making: Data science enables businesses to make decisions based on empirical evidence rather than intuition, reducing risks and increasing efficiency.

- Customer Insights: By analyzing customer data, companies can understand behaviors, preferences, and trends, allowing for personalized marketing and improved customer experiences.

- Operational Efficiency: Data science helps identify bottlenecks and optimize processes, leading to cost savings and enhanced productivity.

- Innovation and Product Development: Insights from data can drive the development of new products and services tailored to market needs.

- Competitive Advantage: Organizations that effectively utilize data science gain a strategic edge by anticipating market shifts and responding swiftly to changes.

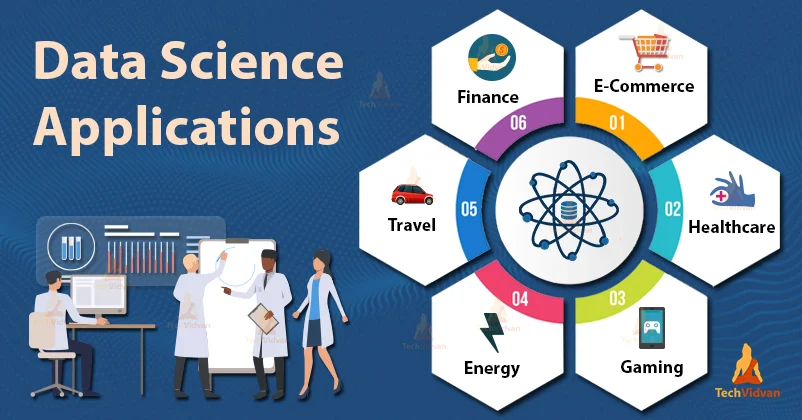

In sectors like healthcare, finance, e-commerce, and marketing, data science plays a pivotal role. For instance, in healthcare, predictive analytics can forecast disease outbreaks, while in finance, fraud detection systems safeguard against unauthorized transactions. The rise of artificial intelligence and machine learning further amplifies the capabilities of data science, making it a cornerstone of modern innovation and strategic planning.

Key Components of Data Science

Data science is a complex field composed of several key components that work in harmony to extract valuable insights from data. Understanding these components is essential for anyone looking to delve into data science:

- Data Collection

  - Systematically gathering data from various sources, including databases, APIs, web scraping, surveys, and sensors.

  - Ensuring data is relevant, comprehensive, and representative of the problem domain.

- Data Cleaning

  - Preparing raw data for analysis by handling missing values, removing duplicates, and correcting inconsistencies.

  - Employing techniques such as normalization, transformation, and encoding to standardize data formats.

- Data Analysis

  - Applying statistical methods and computational techniques to explore and understand data.

  - Identifying patterns, correlations, and anomalies that can inform further analysis or decision-making.

- Data Visualization

  - Creating graphical representations of data insights using tools like Tableau, Power BI, Matplotlib, and Seaborn.

  - Facilitating the communication of findings to stakeholders through clear and impactful visuals.

- Machine Learning and Modeling

  - Building predictive models using algorithms such as linear regression, decision trees, and neural networks.

  - Evaluating model performance and iterating to improve accuracy and reliability.

Each component is interdependent, forming a cohesive workflow that transforms raw data into actionable intelligence. Mastery of these components equips data scientists to tackle complex problems, drive innovation, and contribute significantly to their organizations.
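To make the normalization and encoding step in the Data Cleaning component concrete, here is a minimal sketch using only the Python standard library. It assumes one numeric column rescaled with min-max scaling and one categorical column turned into one-hot vectors; the function names and sample values are hypothetical. In practice, scikit-learn’s `MinMaxScaler` and `OneHotEncoder`, or pandas’ `get_dummies`, are the usual choices.

```python
# Min-max scaling and one-hot encoding with the standard library only.
# Function names and sample data are hypothetical illustrations.

def min_max_scale(values):
    """Rescale numbers linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant column: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(categories):
    """Encode each category as a 0/1 vector; columns follow sorted category order."""
    levels = sorted(set(categories))
    encoded = [[1 if c == level else 0 for level in levels] for c in categories]
    return levels, encoded

ages = [22, 35, 58, 41]
channels = ["web", "store", "web", "app"]

print(min_max_scale(ages))
levels, encoded = one_hot(channels)
print(levels)   # ['app', 'store', 'web']
print(encoded)  # [[0, 0, 1], [0, 1, 0], [0, 0, 1], [1, 0, 0]]
```

Sorting the category levels keeps the column order deterministic. A real pipeline would also store the `lo`/`hi` bounds and the levels learned on training data so the same transformation can be applied to new records.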

Core Concepts of Data Science

At the heart of data science lie fundamental concepts that form the foundation for all data-driven projects. Grasping these core ideas is crucial for anyone aspiring to excel in the field.

Data Collection and Cleaning

Effective data science projects begin with robust data collection and meticulous data cleaning. These foundational steps ensure that the data used for analysis is accurate, reliable, and relevant.

Data Collection involves systematically gathering data from various sources to build a comprehensive dataset. This process can include:

- Web Scraping: Extracting data from websites using tools like Beautiful Soup or Scrapy.

- Data Warehousing: Storing large volumes of data in centralized repositories for easy access and management.

- APIs: Using Application Programming Interfaces to retrieve data from online services and platforms.

- Surveys and Sensors: Collecting data directly from users or through IoT devices.

Effective data collection methods ensure that the dataset is representative and suitable for analysis, forming the basis for subsequent stages in the data science workflow.

Data Cleaning, a core part of data preprocessing, prepares raw data for analysis. Key activities include:

- Handling Missing Values: Imputing or removing incomplete data entries to prevent skewed analysis.

- Removing Duplicates: Ensuring data uniqueness to maintain data integrity.

- Correcting Inconsistencies: Standardizing data formats and resolving discrepancies to ensure uniformity.

- Outlier Detection: Identifying and managing outliers that may distort data analysis.

Mastering data collection and cleaning is essential for transforming raw data into a structured format that allows for meaningful analysis and modeling. This meticulous preparation lays the groundwork for uncovering valuable insights and driving informed decision-making.
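The four cleaning activities listed above can be sketched in a few lines of standard-library Python. This is an illustration under stated assumptions, not a general-purpose cleaner: the records, the `COUNTRY_MAP` label mapping, median imputation, and Tukey’s 1.5 × IQR outlier rule are all choices made for the example.

```python
import statistics

# Hypothetical raw records: an exact duplicate, inconsistent country labels,
# a missing amount (None), and one suspiciously large amount.
raw = [
    {"id": 1, "country": "USA", "amount": 120.0},
    {"id": 2, "country": "usa ", "amount": None},
    {"id": 2, "country": "usa ", "amount": None},  # exact duplicate
    {"id": 3, "country": "U.S.A.", "amount": 95.0},
    {"id": 4, "country": "Canada", "amount": 110.0},
    {"id": 5, "country": "canada", "amount": 5000.0},
]

# Hypothetical mapping used to standardize inconsistent labels.
COUNTRY_MAP = {"usa": "US", "u.s.a.": "US", "canada": "CA"}

def clean(records):
    # 1. Remove exact duplicates while preserving the original order.
    seen, unique = set(), []
    for r in records:
        key = tuple(sorted(r.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(r))  # copy so the raw data is left untouched
    # 2. Correct inconsistencies: trim, lowercase, then map to a standard code.
    for r in unique:
        label = r["country"].strip().lower()
        r["country"] = COUNTRY_MAP.get(label, label)
    # 3. Impute missing amounts with the median, which is robust to the outlier.
    median = statistics.median(r["amount"] for r in unique if r["amount"] is not None)
    for r in unique:
        if r["amount"] is None:
            r["amount"] = median
    return unique

def iqr_outliers(values, k=1.5):
    """4. Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return [v for v in values if v < q1 - k * iqr or v > q3 + k * iqr]

cleaned = clean(raw)
amounts = [r["amount"] for r in cleaned]
print(len(cleaned))           # 5 rows after removing the duplicate
print(iqr_outliers(amounts))  # [5000.0]
```

Whether a flagged value should be removed, capped, or kept is a domain decision; the sketch only flags it.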

Exploratory Data Analysis (EDA)

Exploratory Data Analysis (EDA) is a pivotal step in the data science process, acting as the detective work that uncovers the underlying structure and patterns within a dataset. EDA involves a combination of statistical techniques and visualizations to summarize and interpret data effectively.

Key Objectives of EDA:

- Understand Data Distributions: Assessing how data points are spread across different values using histograms, box plots, and density plots.

- Identify Missing Values: Detecting gaps in the dataset that may require imputation or exclusion.

- Assess Outliers: Spotting unusual data points that deviate significantly from the rest of the dataset.

- Analyze Relationships: Exploring correlations and interactions between different variables through scatter plots, heatmaps, and pair plots.

Techniques and Tools for EDA:

- Descriptive Statistics: Calculating mean, median, mode, variance, and standard deviation to summarize data properties.

- Visualization Tools: Utilizing libraries like Matplotlib, Seaborn, and Plotly to create insightful visuals.

- Correlation Analysis: Measuring the strength and direction of relationships between variables using Pearson or Spearman coefficients.
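Descriptive statistics and both correlation coefficients can be computed with the Python standard library. The sketch below assumes hypothetical ad-spend and sales figures where sales grow non-linearly. Pearson measures linear association, while Spearman applies Pearson to the ranks of the data and therefore captures any monotonic relationship; the helper names are illustrative.

```python
import math
import statistics

def pearson(x, y):
    """Pearson correlation: covariance divided by the product of the spreads."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of positions i..j, converted to 1-based
        for pos in range(i, j + 1):
            result[order[pos]] = avg
        i = j + 1
    return result

def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(ranks(x), ranks(y))

# Hypothetical data: sales rise with ad spend, but not linearly.
spend = [1, 2, 3, 4, 5, 6]
sales = [1, 4, 9, 16, 25, 100]

print("mean", statistics.mean(sales), "median", statistics.median(sales),
      "stdev", round(statistics.stdev(sales), 2))
print("pearson", round(pearson(spend, sales), 3))
print("spearman", round(spearman(spend, sales), 6))  # 1.0: perfectly monotonic
```

The gap between the two coefficients is itself an EDA finding: the relationship is monotonic but not linear, which might suggest a transformation before fitting a linear model.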

EDA is crucial for informing the subsequent stages of data science projects, particularly model selection and feature engineering. By thoroughly examining the dataset, data scientists can make informed decisions that enhance the accuracy and effectiveness of predictive models. Additionally, EDA helps in uncovering insights that may lead to new hypotheses and strategies, driving innovation within the organization.

Machine Learning and Predictive Analytics

Machine Learning (ML) and Predictive Analytics are at the forefront of data science, enabling the creation of models that can forecast future outcomes based on historical data. These techniques transform raw data into actionable predictions, facilitating proactive decision-making and strategic planning.

Machine Learning Overview:

- Supervised Learning: Involves training models on labeled data to predict outcomes. Common algorithms include:

  - Linear Regression: Predicts a continuous target variable based on input features.

  - Decision Trees: Splits data into branches to make classification or regression decisions.

  - Support Vector Machines (SVM): Finds the boundary that separates different classes with the widest possible margin.

- Unsupervised Learning: Focuses on finding hidden patterns in unlabeled data. Key algorithms include:

  - Clustering (e.g., K-Means): Groups data points into clusters based on similarity.

  - Principal Component Analysis (PCA): Reduces dimensionality while preserving as much of the data’s variance as possible.

- Reinforcement Learning: Involves training agents to make sequences of decisions by rewarding desired behaviors.
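As a small illustration of supervised learning, here is a sketch of simple linear regression with one input feature, fitted by ordinary least squares in closed form: the slope is the covariance of x and y divided by the variance of x, and the intercept makes the line pass through the means. The training data is hypothetical and noiseless so the recovered coefficients are easy to check; scikit-learn’s `LinearRegression` handles the multi-feature case.

```python
# Ordinary least squares for a single feature:
#   slope = sum((x - mean_x) * (y - mean_y)) / sum((x - mean_x) ** 2)
#   intercept = mean_y - slope * mean_x

def fit_simple_linear_regression(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept

def predict(slope, intercept, xs):
    """Apply the fitted line to new inputs."""
    return [intercept + slope * v for v in xs]

# Hypothetical labeled training data generated from y = 2x + 1 (no noise).
x_train = [0, 1, 2, 3, 4]
y_train = [1, 3, 5, 7, 9]

slope, intercept = fit_simple_linear_regression(x_train, y_train)
print(slope, intercept)                 # 2.0 1.0
print(predict(slope, intercept, [10]))  # [21.0]
```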

Predictive Analytics Applications:

- Forecasting: Predicting future sales, demand, or market trends using time series analysis.

- Risk Assessment: Evaluating the likelihood of events such as loan defaults or equipment failures.

- Customer Churn Prediction: Identifying customers who are likely to discontinue services.
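Forecasting models are usually judged against a simple baseline. A minimal sketch of one common baseline, a moving-average forecast, follows; the monthly figures and function names are hypothetical. The backtest replays history, forecasting each month only from the months before it, and reports the mean absolute error.

```python
def moving_average_forecast(series, window):
    """Forecast the next value as the mean of the last `window` observations."""
    if window <= 0 or window > len(series):
        raise ValueError("window must be between 1 and len(series)")
    return sum(series[-window:]) / window

def backtest_mae(series, window):
    """Mean absolute error of one-step-ahead forecasts made only from past data."""
    errors = [abs(series[t] - moving_average_forecast(series[:t], window))
              for t in range(window, len(series))]
    return sum(errors) / len(errors)

monthly_sales = [200, 220, 210, 240, 260, 250]  # hypothetical figures

print(moving_average_forecast(monthly_sales, 3))  # (240 + 260 + 250) / 3 = 250.0
print(round(backtest_mae(monthly_sales, 3), 2))
```

A more sophisticated time series model is only worth its complexity if it beats this baseline’s backtest error.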

Machine Learning empowers organizations to automate decision-making processes and improve operational efficiency. For example, in finance, ML algorithms detect fraudulent transactions in real time, safeguarding assets and maintaining trust. In healthcare, predictive models can forecast patient readmission rates, enabling proactive interventions that improve patient outcomes and reduce costs. As technology advances, the integration of ML and predictive analytics continues to drive innovation, making data science an indispensable tool for modern enterprises.

Data Visualization and Reporting

Data visualization and reporting are essential components of data science, transforming complex data into intuitive and actionable insights. Effective visualization facilitates the communication of findings to stakeholders, enabling informed decision-making across all levels of an organization.

Core Concepts of Data Visualization:

- Clarity: Ensuring that visuals are easy to understand and free from unnecessary complexity.

- Accuracy: Representing data truthfully without distorting the underlying information.

- Accessibility: Making visuals accessible to a diverse audience, including non-technical stakeholders.

Key Visualization Tools:

- Tableau: A powerful tool for creating interactive and shareable dashboards.

- Microsoft Power BI: Integrates with various data sources to deliver real-time insights and visualizations.

- Matplotlib and Seaborn: Python plotting libraries. Matplotlib supports static, animated, and interactive plots, and Seaborn builds on it with a high-level interface for statistical graphics.

- D3.js: A JavaScript library for producing dynamic, interactive data visualizations on the web.

Effective Reporting Practices:

- Dashboards: Consolidating multiple visualizations into a single interface for comprehensive monitoring.

- Storytelling: Crafting a narrative around data insights to engage and inform the audience.

- Customization: Tailoring visualizations to the specific needs and preferences of stakeholders.

Data visualization bridges the gap between data analysis and actionable insights. By presenting data in a visually appealing and comprehensible manner, data scientists enable stakeholders to grasp complex information quickly and make strategic decisions with confidence. Whether it’s a sales dashboard, a financial report, or a healthcare analytics presentation, effective visualization is key to unlocking the full potential of data science.

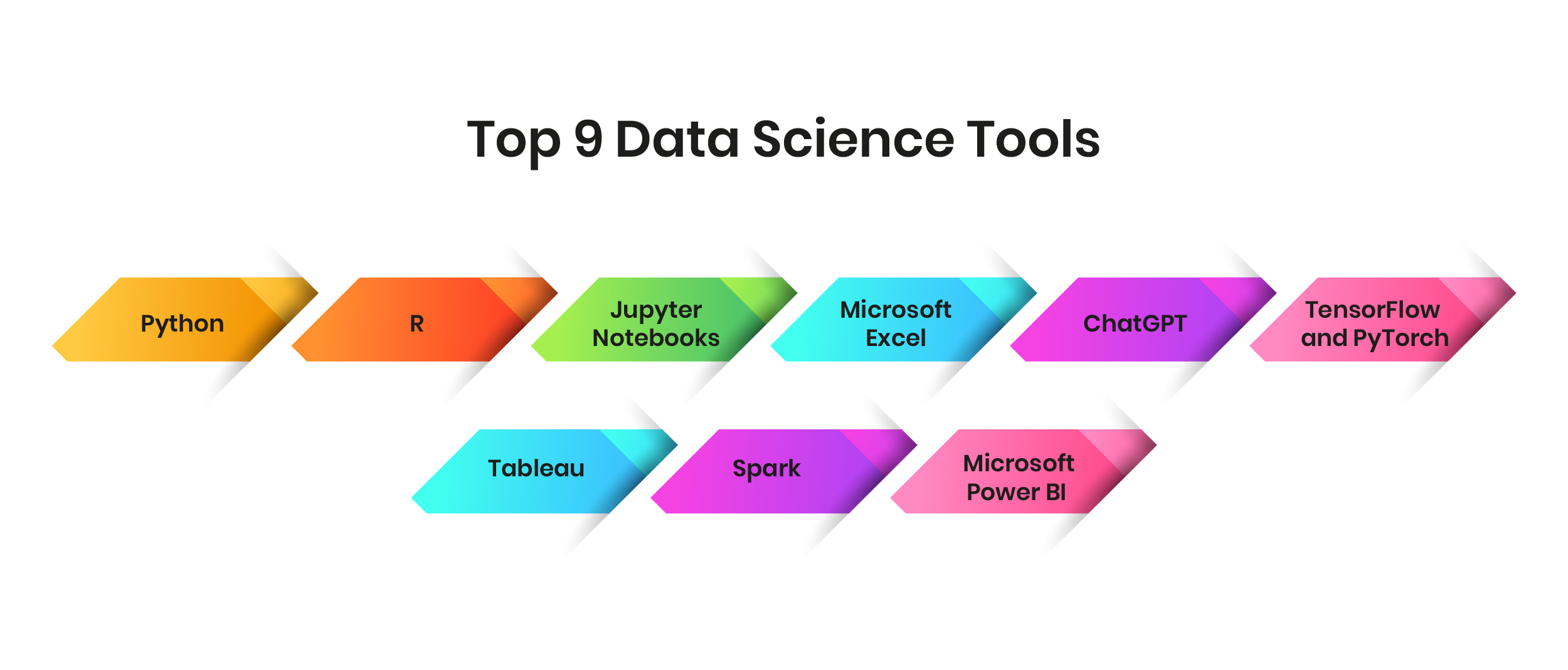

Essential Tools and Technologies in Data Science

Understanding the core concepts of data science is just the beginning. Equipping yourself with the right tools and technologies is crucial for executing data-driven projects effectively. These tools span various aspects of data science, including data manipulation, machine learning, visualization, and more.

Programming Languages for Data Science

Programming is the backbone of data science, enabling data manipulation, analysis, and model building. Several programming languages are indispensable in the data scientist’s toolkit, each offering unique strengths tailored to specific tasks.

1. Python for Data Science

Python is the dominant language in data science, renowned for its simplicity and extensive ecosystem of libraries. Its versatility makes it suitable for a wide range of applications, from data cleaning to machine learning.

- Pandas: For data manipulation and analysis, providing data structures like DataFrames for handling structured data.

- NumPy: Facilitates numerical computations and handling of multi-dimensional arrays.

- Scikit-learn: A robust library for implementing machine learning algorithms.

- TensorFlow and PyTorch: Essential for deep learning applications, enabling the creation of complex neural networks.

Personal Insight: Python’s readability and community support make it an ideal choice for both beginners and experienced data scientists. Its integration with other tools and platforms enhances its utility in diverse projects.

2. R for Data Science

R excels in statistical analysis and data visualization, offering a plethora of packages that cater to complex data needs.

- ggplot2: A powerful tool for creating advanced and customizable visualizations.

- dplyr: Simplifies data manipulation tasks, making it easy to filter, select, and transform data.

- Shiny: Enables the development of interactive web applications directly from R, facilitating dynamic data presentations.

Comparative Advantage: While Python is favored for its general-purpose capabilities, R remains especially popular in academic and research settings thanks to its specialized statistical packages and visualization prowess.

3. SQL (Structured Query Language)

SQL is indispensable for managing and querying relational databases. It allows data scientists to efficiently retrieve, update, and manipulate data stored in structured formats.

- Joins and Subqueries: Essential for combining data from multiple tables.

- Aggregation Functions: Useful for summarizing data through operations like COUNT, SUM, and AVG.

- Indexing: Enhances query performance, especially when dealing with large datasets.

Practical Application: Proficiency in SQL is critical for accessing and preparing data, making it a fundamental skill for data scientists working with databases.
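The SQL features listed above (joins, subqueries, aggregation functions, and indexing) can be tried without a database server using Python’s built-in `sqlite3` module and an in-memory database. The tables, columns, and rows below are hypothetical, and the SQL is kept to syntax that most relational databases share.

```python
import sqlite3

# In-memory database with two hypothetical tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'North'), (2, 'South'), (3, 'North');
    INSERT INTO orders VALUES (1, 1, 50.0), (2, 1, 30.0), (3, 2, 20.0), (4, 3, 40.0);
    -- Index the join column so lookups by customer_id need not scan the whole table.
    CREATE INDEX idx_orders_customer ON orders (customer_id);
""")

# Join + aggregation: order count, total, and average amount per region.
rows = conn.execute("""
    SELECT c.region, COUNT(o.id), SUM(o.amount), AVG(o.amount)
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    GROUP BY c.region
    ORDER BY SUM(o.amount) DESC
""").fetchall()
print(rows)  # [('North', 3, 120.0, 40.0), ('South', 1, 20.0, 20.0)]

# Subquery: customers whose total spend exceeds the average order amount.
big_spenders = conn.execute("""
    SELECT customer_id, SUM(amount)
    FROM orders
    GROUP BY customer_id
    HAVING SUM(amount) > (SELECT AVG(amount) FROM orders)
    ORDER BY customer_id
""").fetchall()
print(big_spenders)  # [(1, 80.0), (3, 40.0)]

conn.close()
```

The index makes no measurable difference on four rows; it is included to show where one typically goes, on columns used in joins and filters.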

4. Java and Scala

Java is valuable for enterprise-level data science projects, particularly in big data processing. Scala, often used with Apache Spark, combines functional programming paradigms with seamless integration into the Java ecosystem.

- Apache Spark: A powerful framework for distributed data processing, enabling massive datasets to be processed in parallel across a cluster of machines.

- Hadoop: Facilitates scalable storage and processing of big data across distributed systems.

Industry Insight: Organizations dealing with large-scale data and requiring high-performance processing often prefer Java and Scala for their robustness and scalability.

5. Julia

Julia is gaining popularity for high-performance numerical analysis and computational science. Because it compiles to native code, well-written Julia can approach the execution speed of C, making it well suited to intensive data science tasks.

- Machine Learning Libraries: Growing support for machine learning algorithms enhances its applicability.

- Parallel Computing: Facilitates efficient processing of large datasets through parallelism.

Emerging Trend: As data science becomes more computation-intensive, Julia’s performance capabilities position it as a promising language for future applications.

Proficiency in these programming languages enables data scientists to tackle a wide array of challenges, from data manipulation and statistical analysis to building sophisticated machine learning models. Choosing the right language often depends on the specific requirements of the project and the existing technology stack within an organization.

Python for Data Science

Python’s dominance in data science is attributable to its simplicity, readability, and the extensive ecosystem of libraries that streamline various data tasks.

Key Libraries and Their Uses:

- Pandas: Offers data structures and functions needed for analyzing structured data. It provides tools for data cleaning, transformation, and manipulation.

- NumPy: Enables efficient numerical computations and handling of large multi-dimensional arrays and matrices.

- Scikit-learn: Provides a range of supervised and unsupervised learning algorithms, making it easy to implement machine learning models.

- TensorFlow and PyTorch: Power deep learning applications, allowing for the development of neural networks and complex machine learning models.

Personal Insight: Python’s versatility makes it suitable for end-to-end data science workflows, from data ingestion and cleaning to modeling and deployment. Its strong community support ensures continuous improvement and a wealth of resources for learning and troubleshooting.

Advantages of Python in Data Science:

- Ease of Learning: Python’s straightforward syntax reduces the learning curve, enabling beginners to quickly get started.

- Extensive Libraries: A rich set of libraries caters to almost every aspect of data science, enhancing productivity and efficiency.

- Integration Capabilities: Python integrates seamlessly with other languages and tools, facilitating the development of comprehensive data solutions.

Example Use Case: Fraud Detection in Finance

Using Python, data scientists can develop machine learning models to detect fraudulent transactions by analyzing transaction patterns and identifying anomalies. Libraries like Scikit-learn can be employed to build classification models, while Pandas facilitates data preprocessing and manipulation.

Python’s flexibility and powerful libraries make it an indispensable tool for data scientists aiming to derive meaningful insights and build robust predictive models.

R for Data Science

R is a specialized language designed for statistical analysis and data visualization, making it a favorite among statisticians and data analysts.

Key Libraries and Their Uses:

- ggplot2: Facilitates the creation of complex and aesthetically pleasing visualizations with a grammar of graphics approach.

- dplyr: Simplifies data manipulation through a set of intuitive functions for filtering, selecting, and transforming data.

- Shiny: Enables the development of interactive web applications directly from R, allowing for dynamic data exploration and visualization.

Comparative Insight: While Python is celebrated for its general-purpose capabilities, R shines in statistical modeling and advanced visualizations. Its comprehensive suite of packages caters specifically to data exploration and presentation needs.

Advantages of R in Data Science:

- Statistical Expertise: R’s extensive statistical packages make it ideal for in-depth data analysis and hypothesis testing.

- Advanced Visualization: Tools like ggplot2 allow for the creation of sophisticated and customizable visualizations that enhance data storytelling.

- Community and Support: A strong community of statisticians and data scientists ensures the continuous development of new packages and resources.

Example Use Case: Clinical Trial Analysis in Healthcare

In healthcare, R can be used to analyze clinical trial data, identifying significant trends and outcomes. Using dplyr for data manipulation and ggplot2 for visualization, data scientists can present findings in a clear and impactful manner, aiding in the development of effective treatment plans.

R’s strength in statistical analysis and visualization makes it a powerful tool for data scientists focused on deriving deep insights from complex datasets.

Data Science Libraries and Frameworks

Libraries and frameworks are the building blocks of data science, providing the tools necessary to manipulate data, build models, and create visualizations efficiently. Mastery of these libraries enhances a data scientist’s ability to execute complex tasks seamlessly.

1. Pandas and NumPy for Data Manipulation

- Pandas:

  - DataFrames: Facilitates the handling of structured data, allowing for easy data manipulation and analysis.

  - Functions: Provides functions for data cleaning, merging, reshaping, and aggregation.

  - Performance: Built on NumPy arrays, enabling efficient, vectorized processing of large datasets.

- NumPy:

  - Arrays: Offers powerful n-dimensional array objects for handling numerical data.

  - Mathematical Functions: Includes a vast array of mathematical functions for numerical computations.

  - Integration: Integrates seamlessly with other Python libraries, enhancing overall functionality.

Personal Insight: Combining Pandas and NumPy allows data scientists to perform complex data manipulations with ease, laying the groundwork for advanced analysis and modeling.

2. Scikit-learn and TensorFlow for Machine Learning

- Scikit-learn:

  - Algorithms: Implements a wide range of machine learning algorithms for classification, regression, clustering, and more.

  - Utilities: Provides tools for model evaluation, selection, and validation.

  - Ease of Use: Designed with a user-friendly API, making it accessible for both beginners and experts.

- TensorFlow:

  - Deep Learning: Supports the development of neural networks and deep learning models for complex tasks.

  - Scalability: Optimized for performance and scalability, suitable for large-scale machine learning applications.

  - Flexibility: Offers flexibility in designing and deploying custom machine learning models.

3. Matplotlib and Seaborn for Data Visualization

- Matplotlib:

  - Customization: Highly customizable, allowing for the creation of a wide variety of static, animated, and interactive plots.

  - Integration: Works well with other Python libraries, enhancing visualization capabilities.

  - Flexibility: Suitable for creating detailed and intricate visualizations tailored to specific needs.

- Seaborn:

  - Statistical Graphics: Specialized in creating attractive and informative statistical graphics.

  - Simplified Syntax: Simplifies the creation of complex visualizations with a high-level interface.

  - Integration: Built on top of Matplotlib, providing enhanced visualization options and aesthetics.

| Library/Framework | Purpose | Key Features |
|---|---|---|
| Pandas | Data manipulation and analysis | DataFrames, merging, reshaping |
| NumPy | Numerical computing | N-dimensional arrays, mathematical functions |
| Scikit-learn | Machine learning | Classification, regression, clustering |
| TensorFlow | Deep learning | Neural networks, scalability |
| Matplotlib | Data visualization | Highly customizable plots |
| Seaborn | Statistical data visualization | Attractive statistical graphics |

These libraries and frameworks empower data scientists to perform a wide range of tasks efficiently, from cleaning and manipulating data to building sophisticated machine learning models and creating compelling visualizations. Mastery of these tools is essential for executing data-driven projects that deliver impactful insights and drive strategic decisions.

Pandas and NumPy for Data Manipulation

Pandas and NumPy are foundational libraries in Python, indispensable for data manipulation and numerical analysis.

Pandas Features:

- Data Structures: Provides DataFrame and Series objects for handling tabular data.

- Data Cleaning: Tools for handling missing data, duplicates, and data transformation.

- Grouping and Aggregation: Facilitates grouping data and performing aggregate operations.

- Merging and Joining: Enables combining data from different sources seamlessly.

NumPy Features:

- Array Operations: Efficient handling of large multi-dimensional arrays and matrices.

- Mathematical Functions: Comprehensive set of mathematical functions for numerical computations.

- Broadcasting: Facilitates operations on arrays of different shapes and sizes.

- Integration: Seamlessly integrates with other scientific computing libraries, enhancing overall functionality.

Personal Insight: Together, Pandas and NumPy provide a powerful toolkit for data scientists, enabling efficient data manipulation and preparation for analysis and modeling.

Example Use Case: Data Transformation in E-commerce

In an e-commerce platform, Pandas can be used to transform raw transaction data into meaningful insights by aggregating sales by region, identifying top-selling products, and analyzing customer purchase patterns. NumPy’s numerical operations enhance the efficiency of these transformations, enabling rapid data processing.
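The core of that e-commerce transformation is a group-and-aggregate step. With pandas it would typically be a single call such as `df.groupby("region")["revenue"].sum()`, assuming a DataFrame with those (hypothetical) columns. The dependency-free sketch below performs the same aggregation with `collections` so the logic is visible; the transactions are invented for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical raw transactions: (region, product, quantity, unit_price)
transactions = [
    ("West", "laptop", 2, 900.0),
    ("East", "mouse", 10, 25.0),
    ("West", "mouse", 4, 25.0),
    ("East", "laptop", 1, 900.0),
    ("East", "monitor", 2, 200.0),
]

revenue_by_region = defaultdict(float)  # region -> total revenue
units_by_product = Counter()            # product -> total units sold
for region, product, quantity, unit_price in transactions:
    revenue_by_region[region] += quantity * unit_price
    units_by_product[product] += quantity

print(dict(revenue_by_region))          # {'West': 1900.0, 'East': 1550.0}
print(units_by_product.most_common(2))  # [('mouse', 14), ('laptop', 3)]
```

On large datasets, pandas and NumPy perform these aggregations with vectorized operations, which is where the efficiency gain described above comes from.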

Mastering Pandas and NumPy is essential for any data scientist aiming to perform effective data manipulation and preparatory tasks, setting the stage for advanced analysis and model building.

Scikit-learn and TensorFlow for Machine Learning

Machine Learning is a cornerstone of data science, and libraries like Scikit-learn and TensorFlow provide the tools necessary to build and deploy advanced models.

Scikit-learn Features:

- Supervised Learning Algorithms: Includes algorithms like linear regression, decision trees, and support vector machines.

- Unsupervised Learning Algorithms: Offers clustering algorithms like K-Means and dimensionality reduction techniques like PCA.

- Model Evaluation: Tools for cross-validation, grid search, and performance metrics to evaluate model effectiveness.

- Preprocessing: Functions for data scaling, encoding, and feature selection.
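Cross-validation, one of the model-evaluation tools mentioned above, estimates how a model performs on data it was not trained on. In scikit-learn this is usually `KFold` together with `cross_val_score`; the standard-library sketch below shows the underlying idea. The splitting helper, the "predict the training mean" baseline model, and the data are all hypothetical.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train, test) index lists; each sample appears in exactly one test fold."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once so folds do not follow row order
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

def cross_validate(xs, ys, k, fit, score):
    """Train on k-1 folds, score on the held-out fold, and average the k scores."""
    scores = []
    for train, test in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        scores.append(score(model, [xs[i] for i in test], [ys[i] for i in test]))
    return sum(scores) / len(scores)

# Hypothetical baseline model: always predict the mean of the training targets.
def fit_mean(xs, ys):
    return sum(ys) / len(ys)

def mean_absolute_error(model, xs, ys):
    return sum(abs(y - model) for y in ys) / len(ys)

xs = list(range(10))
ys = [3.0, 5.0, 4.0, 6.0, 5.0, 7.0, 6.0, 8.0, 7.0, 9.0]
cv_mae = cross_validate(xs, ys, k=5, fit=fit_mean, score=mean_absolute_error)
print(round(cv_mae, 3))
```

Any candidate model can be plugged in through the `fit` and `score` callables and compared against this baseline on identical folds.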

TensorFlow Features:

- Deep Learning Models: Supports the development of complex neural networks, including CNNs, RNNs, and GANs.

- Scalability: Optimized for training large-scale models on distributed systems and GPUs.

- Flexibility: Allows for custom model architectures and integration with other TensorFlow tools like TensorBoard for visualization.

- Deployment: Facilitates deploying models to production environments through TensorFlow Serving and TensorFlow Lite.

Comparative Insight: While Scikit-learn is ideal for traditional machine learning tasks, TensorFlow excels in deep learning applications, making them complementary tools in a data scientist’s arsenal.

Example Use Case: Predictive Maintenance in Manufacturing

In manufacturing, Scikit-learn can be used to develop models that predict equipment failures based on historical sensor data. TensorFlow can further enhance these models by incorporating deep learning techniques to analyze complex patterns and improve prediction accuracy.

By leveraging the capabilities of Scikit-learn and TensorFlow, data scientists can build robust machine learning models that drive innovation and operational efficiency across various industries.

Matplotlib and Seaborn for Data Visualization

Data visualization is a powerful tool in data science, enabling the clear and effective presentation of data insights. Libraries like Matplotlib and Seaborn are essential for creating a wide range of visualizations.

Matplotlib Features:

- Versatility: Supports numerous plot types, including line plots, bar charts, scatter plots, and histograms.

- Customization: Offers extensive customization options for plot aesthetics, including colors, labels, and annotations.

- Interactivity: Facilitates the creation of interactive plots with tools like widgets and event handling.

- Integration: Works seamlessly with other libraries like Pandas and NumPy for enhanced functionality.

Seaborn Features:

- Statistical Plots: Specializes in creating beautiful and informative statistical graphics, such as violin plots, swarm plots, and pair plots.

- Simplified Syntax: Provides a high-level interface for creating complex visualizations with minimal code.

- Theming: Offers built-in themes and color palettes that enhance the appearance of plots.

- Advanced Features: Supports features like faceting and multi-plot grids for comparative analysis.

Personal Insight: Combining Matplotlib’s flexibility with Seaborn’s statistical power allows data scientists to create insightful and visually appealing plots that convey complex data relationships effectively.

Example Use Case: Sales Trend Analysis in Retail

In retail, Matplotlib can be used to plot sales trends over time, while Seaborn can visualize the distribution of sales across different regions and product categories. These visualizations help stakeholders understand performance patterns and make informed strategic decisions.

Mastery of Matplotlib and Seaborn is essential for data scientists aiming to present data insights in a clear, compelling, and actionable manner. Effective visualization enhances the storytelling aspect of data science, making it easier for stakeholders to grasp complex information.

Applications of Data Science

Understanding the essential tools and technologies in data science paves the way for exploring its diverse applications across various industries. Data science is not confined to a single sector; its versatility enables transformative impacts in multiple domains.

Data Science in Business and Marketing

Data science revolutionizes the way businesses operate and engage with their customers. By leveraging data, organizations can optimize marketing strategies, enhance customer experiences, and drive overall business growth.

Enhanced Customer Insights

- Behavioral Analysis: Understanding customer behaviors and preferences through data analysis.

- Segmentation: Dividing customers into distinct groups based on demographics, purchasing patterns, and engagement levels.

- Personalization: Tailoring marketing campaigns and product recommendations to individual customer needs.
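Customer segmentation is often done with K-Means clustering. Below is a minimal standard-library sketch of Lloyd’s algorithm, the classic K-Means procedure, assuming two hypothetical features per customer that are already on comparable scales (in practice, features are usually normalized first because K-Means relies on distances). scikit-learn’s `KMeans` adds smarter initialization and multiple restarts.

```python
import math
import random

def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's algorithm: assign each point to its nearest centroid, then move
    each centroid to the mean of its points, until the centroids stop changing."""
    centroids = random.Random(seed).sample(points, k)  # start from k distinct data points
    for _ in range(max_iter):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        new_centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: the assignments no longer move the centroids
        centroids = new_centroids
    return centroids, clusters

# Hypothetical customers: (annual spend in $k, store visits per month).
customers = [(1.0, 2.0), (1.5, 1.8), (1.2, 2.2), (8.0, 9.0), (8.5, 8.7), (7.8, 9.3)]
centroids, clusters = kmeans(customers, k=2)
for centroid, members in zip(centroids, clusters):
    print([round(v, 2) for v in centroid], members)
```

K-Means can settle on different clusters depending on the starting centroids, which is why library implementations typically run several initializations and keep the best result.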

Predictive Analytics

- Forecasting Trends: Anticipating market trends and consumer demand to inform strategic planning.

- Sales Optimization: Predicting future sales to manage inventory and allocate resources effectively.

- Risk Management: Identifying potential risks and mitigating them through proactive measures.

Real-Time Data Processing

- Dynamic Decision-Making: Utilizing real-time data streams to make quick, informed decisions.

- Customer Engagement: Responding to customer interactions promptly to enhance satisfaction and loyalty.

- Competitive Advantage: Staying ahead of competitors by leveraging up-to-the-minute data insights.
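Real-time systems usually keep small, incrementally updated summaries rather than reprocessing the whole history. The minimal sketch below maintains a rolling mean over the last few readings of a stream and flags readings that jump well above it. The window size, the 1.5x threshold, and the order counts are hypothetical. Production systems would typically run this kind of logic inside a stream-processing framework.

```python
from collections import deque


class RollingMean:
    """Mean of the most recent `window` values, updated in O(1) per reading."""

    def __init__(self, window):
        self.window = window
        self.values = deque()
        self.total = 0.0

    def update(self, x):
        self.values.append(x)
        self.total += x
        if len(self.values) > self.window:
            self.total -= self.values.popleft()  # drop the oldest reading
        return self.mean

    @property
    def mean(self):
        return self.total / len(self.values) if self.values else None


def monitor(stream, window=3, threshold=1.5):
    """Return the positions of readings above `threshold` times the recent mean."""
    rolling = RollingMean(window)
    alerts = []
    for t, x in enumerate(stream):
        baseline = rolling.mean  # mean of the readings before this one
        if baseline is not None and x > threshold * baseline:
            alerts.append(t)
        rolling.update(x)
    return alerts


# Hypothetical orders per minute arriving from a live feed
orders_per_minute = [10, 12, 11, 30, 12, 11]
print(monitor(orders_per_minute))
```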

Automated Insights

- AI-Powered Tools: Using artificial intelligence to automate the extraction of valuable insights from data.

- Accessibility: Democratizing data access, allowing non-technical personnel to leverage data for decision-making.

- Efficiency: Reducing the time and effort required to generate insights, enabling faster response to market changes.

Personal Insight: Data science empowers businesses to move from reactive to proactive strategies, fostering a data-driven culture that enhances operational efficiencies and drives innovation.

Behavioral Analytics and Sentiment Analysis

- Customer Sentiment: Analyzing customer reviews and social media interactions to gauge sentiment and adjust strategies accordingly.

- Emotion Detection: Understanding the emotional drivers behind customer behavior to create more compelling marketing messages.

- Engagement Metrics: Measuring and optimizing customer engagement through data-driven insights.
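The simplest form of sentiment analysis scores text against a lexicon of positive and negative words. The sketch below uses a tiny hypothetical lexicon and handles only one-word negation ("not fast"). Real systems rely on curated lexicons (such as VADER) or trained language models, which cope far better with sarcasm, context, and domain-specific vocabulary.

```python
import re

# Tiny hypothetical lexicon: word -> polarity weight (illustration only)
LEXICON = {
    "great": 2, "love": 2, "good": 1, "fast": 1,
    "bad": -1, "slow": -1, "broken": -2, "terrible": -2,
}
NEGATORS = {"not", "never", "no"}


def sentiment_score(text):
    """Sum lexicon weights, flipping the sign of a word preceded by a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        value = LEXICON.get(token, 0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value  # "not fast" counts as negative
        score += value
    return score


def sentiment_label(text):
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


reviews = [
    "Great product, love it!",
    "Delivery was not fast and the box arrived broken.",
    "It arrived on Tuesday.",
]
for review in reviews:
    print(sentiment_label(review), "|", review)
```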

A/B Testing in Marketing Campaigns

- Experimentation: Conducting A/B tests to compare different marketing strategies and identify the most effective approaches.

- Result Analysis: Analyzing the outcomes of A/B tests to refine marketing tactics and maximize ROI.

- Continuous Improvement: Iteratively improving marketing campaigns based on data-driven findings.
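For conversion-style A/B tests, a common analysis is the two-proportion z-test. The sketch below makes three assumptions: visitors are independent, the sample size was fixed before the test started, and the counts are hypothetical. It uses only the standard library, relying on the identity that the two-sided p-value equals `erfc(|z| / sqrt(2))`.

```python
import math


def two_proportion_z_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Two-sided z-test for a difference between two conversion rates,
    using the pooled proportion under the null hypothesis of no difference."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        raise ValueError("no variation in conversions; the test is undefined")
    z = (rate_b - rate_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical campaign: variant A (control) vs. variant B (new email subject line)
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Here variant B converts at 5.0% versus 4.0% for A. Whether that difference justifies a rollout also depends on the smallest effect that matters to the business, not on the p-value alone.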

Data science in business and marketing not only drives efficiency and profitability but also fosters innovation by enabling the development of data-informed products and services. As businesses continue to generate and collect vast amounts of data, the role of data science in shaping strategic decisions and enhancing customer engagement will only become more prominent.

Data Science in Healthcare and Medicine

Data science is transforming the healthcare and medical industries by enabling more accurate diagnoses, personalized treatments, and improved patient care. The integration of data-driven approaches is revolutionizing traditional healthcare practices, leading to significant advancements.

Predictive Diagnostics

- Early Detection: Using machine learning models to predict the onset of diseases such as diabetes, cancer, and heart disease.

- Risk Stratification: Identifying high-risk patients for targeted interventions and preventive measures.

- Personalized Treatment Plans: Developing customized treatment strategies based on individual patient data and genetic information.

Operational Efficiency

- Resource Allocation: Optimizing the allocation of medical resources, such as hospital beds, staff, and equipment, based on predictive analytics.

- Supply Chain Management: Enhancing the efficiency of medical supply chains through data-driven forecasting and inventory management.

- Workflow Optimization: Streamlining healthcare workflows to reduce wait times and improve patient satisfaction.

Patient Care Enhancement

- Electronic Health Records (EHR): Analyzing EHR data to gain insights into patient histories and treatment outcomes.

- Telemedicine: Utilizing data science to improve telehealth services, enabling remote monitoring and consultations.

- Healthcare Analytics: Leveraging data analytics to monitor patient health metrics and predict potential complications.

Examples of Data Science in Healthcare:

- Predictive Modeling for Heart Disease: Developing models to predict the likelihood of heart disease based on patient data, aiding in early intervention and prevention.

- Genomic Data Analysis: Analyzing genomic data to identify genetic markers associated with specific diseases, facilitating personalized medicine.

- Clinical Trial Optimization: Enhancing the design and execution of clinical trials through data-driven methodologies, improving efficiency and outcomes.

Personal Insight: Data science in healthcare not only improves clinical outcomes but also enhances the overall efficiency and effectiveness of healthcare delivery, making it an invaluable asset in the medical field.

Machine Learning for Medical Imaging

- Image Recognition: Using deep learning algorithms to detect abnormalities in medical images such as X-rays, MRIs, and CT scans.

- Automated Diagnosis: Enhancing diagnostic accuracy by assisting radiologists in identifying conditions like tumors and fractures.

- Workflow Integration: Integrating machine learning models into radiology workflows to expedite the analysis process and reduce diagnostic delays.

Healthcare Forecasting

- Epidemiological Modeling: Predicting the spread of diseases and evaluating the impact of public health interventions using data-driven models.

- Demand Forecasting: Anticipating patient influx and resource needs to ensure preparedness and optimal service delivery.
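Epidemiological forecasts often start from compartmental models. The sketch below implements the classic SIR model (susceptible, infected, recovered) with simple Euler steps. The population size, transmission rate `beta`, and recovery rate `gamma` are hypothetical. Lowering `beta` stands in for an intervention such as distancing. Real public-health models are fitted to surveillance data and include many more compartments and sources of uncertainty.

```python
def simulate_sir(population, initial_infected, beta, gamma, days, steps_per_day=10):
    """Euler integration of dS/dt = -beta*S*I/N, dI/dt = beta*S*I/N - gamma*I,
    dR/dt = gamma*I. Returns one (S, I, R) tuple per day, starting at day 0."""
    dt = 1.0 / steps_per_day
    s = float(population - initial_infected)
    i = float(initial_infected)
    r = 0.0
    history = [(s, i, r)]
    for _ in range(days):
        for _ in range(steps_per_day):
            new_infections = beta * s * i / population * dt
            recoveries = gamma * i * dt
            s -= new_infections
            i += new_infections - recoveries
            r += recoveries
        history.append((s, i, r))
    return history


def peak_infected(history):
    return max(i for _, i, _ in history)


# Hypothetical town of 10,000 people; the basic reproduction number is beta / gamma
baseline = simulate_sir(10_000, 10, beta=0.3, gamma=0.1, days=160)
with_intervention = simulate_sir(10_000, 10, beta=0.2, gamma=0.1, days=160)
print(round(peak_infected(baseline)), round(peak_infected(with_intervention)))
```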

The application of data science in healthcare is not only enhancing clinical practices but also contributing to the creation of more resilient and responsive healthcare systems. By fostering a data-driven approach, the healthcare industry can achieve unprecedented levels of efficiency, accuracy, and patient satisfaction.

Data Science in Finance and Banking

Data science is a game-changer in the finance and banking sectors, driving innovations that enhance operational efficiency, risk management, and customer service. The application of advanced data techniques is revolutionizing traditional financial practices, offering new opportunities for growth and security.

Risk Assessment and Management

- Credit Scoring: Developing models to assess the creditworthiness of individuals and businesses, enabling more accurate lending decisions.

- Fraud Detection: Utilizing machine learning algorithms to identify and prevent fraudulent transactions in real time.

- Market Risk Analysis: Predicting potential market fluctuations and assessing their impact on investment portfolios.
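Credit scoring models are often built on logistic regression, which turns a weighted sum of applicant features into a probability of default. The sketch below trains one with plain batch gradient descent. Everything in it is hypothetical: the two features (debt-to-income ratio and late payments in the past year), the eight applicants, the learning settings, and the function names. A real scorecard would use far more data, a held-out validation set, probability calibration, and fairness and regulatory review.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_logistic(X, y, lr=0.5, epochs=2000):
    """Batch gradient descent on the average log-loss."""
    n, d = len(X), len(X[0])
    weights, bias = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, target in zip(X, y):
            error = predict_proba(weights, bias, x) - target  # d(log-loss)/d(logit)
            for j in range(d):
                grad_w[j] += error * x[j]
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# Hypothetical applicants: (debt-to-income ratio, late payments in past year)
X = [(0.10, 0), (0.20, 0), (0.15, 1), (0.30, 0),
     (0.60, 3), (0.70, 2), (0.55, 4), (0.80, 3)]
y = [0, 0, 0, 0, 1, 1, 1, 1]  # 1 = defaulted
weights, bias = train_logistic(X, y)
print(f"P(default) for a new applicant: {predict_proba(weights, bias, (0.45, 1)):.2f}")
```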

Customer Analytics

- Personalized Services: Analyzing customer data to offer tailored financial products and services, enhancing customer satisfaction and loyalty.

- Churn Prediction: Identifying customers at risk of leaving and implementing retention strategies based on predictive insights.

- Sentiment Analysis: Gauging customer sentiment through social media and feedback to improve service offerings and address concerns.

Algorithmic Trading

- Automated Trading Strategies: Developing algorithms that execute trades based on market data and predefined criteria, optimizing investment returns.

- High-Frequency Trading (HFT): Leveraging data science to perform rapid trading operations, capitalizing on minute market movements.

- Portfolio Optimization: Using predictive models to balance risk and return, creating optimized investment portfolios tailored to individual preferences.
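Portfolio optimization in the Markowitz mean-variance tradition trades off expected return against variance. For two assets, the minimum-variance mix has a closed form, `w1 = (s2**2 - rho*s1*s2) / (s1**2 + s2**2 - 2*rho*s1*s2)`. The sketch below applies it with hypothetical returns, volatilities, and correlation. Real optimizers handle many assets, estimated covariance matrices, and constraints such as no short selling. This two-asset formula can return a weight outside [0, 1], which would mean shorting one asset.

```python
import math


def min_variance_weights(sigma1, sigma2, rho):
    """Closed-form minimum-variance weights for a two-asset portfolio.
    Weights sum to 1; a weight outside [0, 1] implies a short position."""
    cov = rho * sigma1 * sigma2
    w1 = (sigma2 ** 2 - cov) / (sigma1 ** 2 + sigma2 ** 2 - 2 * cov)
    return w1, 1 - w1


def portfolio_stats(w1, mu1, mu2, sigma1, sigma2, rho):
    """Expected return and volatility (standard deviation) of the mix."""
    w2 = 1 - w1
    expected = w1 * mu1 + w2 * mu2
    variance = ((w1 * sigma1) ** 2 + (w2 * sigma2) ** 2
                + 2 * w1 * w2 * rho * sigma1 * sigma2)
    return expected, math.sqrt(variance)


# Hypothetical annual figures: a stock fund and a bond fund
mu_stock, mu_bond = 0.08, 0.04
sigma_stock, sigma_bond, rho = 0.18, 0.07, 0.2
w_stock, w_bond = min_variance_weights(sigma_stock, sigma_bond, rho)
ret, vol = portfolio_stats(w_stock, mu_stock, mu_bond, sigma_stock, sigma_bond, rho)
print(f"stock {w_stock:.1%}, bond {w_bond:.1%}, return {ret:.2%}, volatility {vol:.2%}")
```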

Regulatory Compliance

- Anti-Money Laundering (AML): Implementing data-driven solutions to detect and prevent money laundering activities.

- RegTech Solutions: Utilizing data science to streamline compliance processes, reducing the burden of regulatory reporting and monitoring.

Examples of Data Science in Finance:

- Fraud Detection Systems: Deploying machine learning models to analyze transaction patterns and flag suspicious activities in real time.

- Credit Risk Modeling: Using historical data to predict the likelihood of loan defaults, enabling more informed lending decisions.

- Investment Forecasting: Applying predictive analytics to estimate stock price movements and inform investment strategy, while recognizing that market forecasts carry substantial uncertainty.
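Production fraud systems use trained models over many signals. The core idea of flagging behavior that departs from a customer's norm can still be sketched with a z-score on transaction amounts. The history, new transactions, 3-standard-deviation threshold, and function name below are hypothetical. Robust statistics (such as the median and median absolute deviation) or learned models are usually preferred, because a single large outlier in the history inflates the standard deviation.

```python
import statistics


def flag_unusual(history, new_amounts, threshold=3.0):
    """Return (amount, z-score) for new amounts more than `threshold`
    standard deviations from the customer's historical mean."""
    if len(history) < 2:
        raise ValueError("need at least two past transactions")
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation
    if stdev == 0:
        # Identical history: treat any different amount as unusual
        return [(a, None) for a in new_amounts if a != mean]
    flagged = []
    for amount in new_amounts:
        z = (amount - mean) / stdev
        if abs(z) > threshold:
            flagged.append((amount, round(z, 1)))
    return flagged


# Hypothetical card history (in dollars) and two incoming transactions
past_amounts = [42.0, 38.5, 55.0, 47.2, 40.1, 51.3, 44.8]
incoming = [49.0, 720.0]
print(flag_unusual(past_amounts, incoming))
```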

Personal Insight: Data science empowers financial institutions to enhance security, optimize operations, and deliver personalized experiences, making it a cornerstone of modern finance.

Predictive Maintenance in Banking Infrastructure

- System Monitoring: Analyzing data from banking systems to predict and prevent potential failures, ensuring uninterrupted service.

- Resource Allocation: Optimizing the deployment of IT resources based on predictive maintenance models, reducing downtime and operational costs.

Customer Lifetime Value (CLV) Prediction

- Revenue Forecasting: Estimating the total value a customer will bring over their lifetime, enabling targeted marketing and resource allocation.

- Retention Strategies: Identifying high-value customers and implementing strategies to enhance their retention and loyalty.
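One widely taught simplification of CLV assumes three constants: a margin `m` per period, a retention rate `r`, and a discount rate `d`. It also assumes the first margin arrives at the end of the first period if the customer is retained. Summing the discounted expected margins `m * (r / (1 + d)) ** t` over t = 1, 2, 3, and so on gives the closed form `m * r / (1 + d - r)`. The sketch below computes both forms so they can be compared. The margin, retention, and discount values are hypothetical, and real CLV models usually estimate retention per customer or segment.

```python
def clv_closed_form(margin, retention, discount):
    """CLV = m * r / (1 + d - r): sum of m * (r / (1 + d)) ** t for t >= 1."""
    return margin * retention / (1 + discount - retention)


def clv_by_summation(margin, retention, discount, periods):
    """Same quantity, summed explicitly over a finite number of periods."""
    return sum(margin * (retention / (1 + discount)) ** t for t in range(1, periods + 1))


# Hypothetical customer: $120 margin per year, 75% yearly retention, 8% discount rate
print(round(clv_closed_form(120, 0.75, 0.08), 2))
print(round(clv_by_summation(120, 0.75, 0.08, periods=10), 2))
```

The 10-period sum comes out slightly below the closed form because it ignores value earned after year 10.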

Data science in finance and banking not only enhances operational efficiencies but also fortifies institutions against emerging risks. By leveraging advanced data techniques, financial entities can achieve greater agility, security, and customer-centricity, ensuring sustained growth and resilience in a competitive market.

Data Science in Social Media and E-commerce

Data science plays a pivotal role in shaping the strategies and operations of social media platforms and e-commerce businesses. By analyzing vast amounts of data generated by users, organizations can enhance user experiences, optimize marketing efforts, and drive sales growth.

Social Media Applications

- Sentiment Analysis: Understanding user sentiments through analysis of posts, comments, and interactions to gauge public opinion and emotional responses.

- Trend Forecasting: Identifying emerging trends and viral content to inform content strategies and engagement initiatives.

- User Behavior Analysis: Studying user interactions and behaviors to personalize content delivery and improve platform engagement.

- Ad Optimization: Leveraging machine learning to optimize ad placements and targeting, maximizing ad performance and ROI.

E-commerce Applications

- Recommendation Systems: Analyzing user behavior and preferences to suggest products, enhancing user experience and increasing sales.

- Customer Segmentation: Dividing customers into distinct groups based on purchasing patterns, demographics, and behaviors to tailor marketing strategies.

- Inventory Management: Using predictive analytics to forecast demand, optimizing inventory levels and reducing costs.

- Pricing Optimization: Implementing dynamic pricing strategies based on market trends, competitor analysis, and customer demand.
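Many recommendation systems are built on collaborative filtering. The sketch below shows one simple variant, item-based filtering with cosine similarity over who bought what. Items bought by overlapping sets of customers count as similar, and a customer is offered unowned items that are most similar to what they already own. The customers, products, and function names are hypothetical. Production systems add rating or implicit-feedback weights, matrix factorization or neural models, and substantial engineering for scale.

```python
import math
from collections import defaultdict


def item_similarities(purchases):
    """Cosine similarity between items, treating each item as the set of its buyers:
    sim(a, b) = |buyers(a) & buyers(b)| / sqrt(|buyers(a)| * |buyers(b)|)."""
    buyers = defaultdict(set)
    for user, items in purchases.items():
        for item in items:
            buyers[item].add(user)
    sims = {}
    for a in buyers:
        for b in buyers:
            if a != b:
                overlap = len(buyers[a] & buyers[b])
                if overlap:
                    sims[(a, b)] = overlap / math.sqrt(len(buyers[a]) * len(buyers[b]))
    return sims


def recommend(user, purchases, sims, top_n=2):
    """Score each unowned item by its summed similarity to the user's items."""
    owned = purchases[user]
    scores = defaultdict(float)
    for (a, b), sim in sims.items():
        if a in owned and b not in owned:
            scores[b] += sim
    # Highest score first; alphabetical order breaks ties deterministically
    return sorted(scores, key=lambda item: (-scores[item], item))[:top_n]


# Hypothetical purchase histories for an outdoor-gear shop
purchases = {
    "ana": {"tent", "stove", "lantern"},
    "ben": {"tent", "stove"},
    "cai": {"tent", "lantern", "sleeping_bag"},
    "dee": {"stove", "kettle"},
    "eli": {"book"},
}
sims = item_similarities(purchases)
print(recommend("ben", purchases, sims))
```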

Examples of Data Science in Social Media and E-commerce:

- Personalized Recommendations: Companies like Amazon (e-commerce) and Netflix (streaming) use recommendation systems to suggest products and content, enhancing user satisfaction and loyalty.

- Social Media Marketing: Platforms like Facebook and Instagram use data science to analyze user interactions and optimize ad targeting, improving campaign effectiveness.

- Dynamic Pricing Models: Airlines and ride-sharing services employ data-driven pricing models to adjust fares based on real-time demand and supply conditions.

- Customer Sentiment Tracking: Brands monitor social media sentiment to understand consumer perceptions and adapt their marketing strategies accordingly.

Personal Insight: Data science bridges the gap between user data and strategic decision-making, enabling businesses to create personalized experiences, optimize operations, and drive sustained growth.

Behavioral Analytics

- User Engagement: Analyzing user interactions to identify patterns and preferences, allowing for more engaging and relevant content delivery.

- Conversion Rate Optimization: Studying the customer journey to identify bottlenecks and implement strategies that enhance conversion rates.
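Conversion rate optimization usually starts with a funnel report: the share of users who move from each step of the customer journey to the next. The stage names and counts below are hypothetical. The step with the lowest pass-through rate is a natural first place to look for a bottleneck. The biggest absolute drop-off and the cost of fixing each step matter too.

```python
def funnel_report(stages):
    """stages: ordered list of (stage_name, users_reaching_stage).
    Returns per-step conversion rates and the end-to-end conversion rate."""
    steps = []
    for (prev_name, prev_users), (name, users) in zip(stages, stages[1:]):
        rate = users / prev_users if prev_users else 0.0
        steps.append((f"{prev_name} -> {name}", rate))
    overall = stages[-1][1] / stages[0][1] if stages[0][1] else 0.0
    return steps, overall


# Hypothetical weekly counts of users reaching each step
stages = [("visited", 10_000), ("viewed_product", 4_000), ("added_to_cart", 1_200),
          ("checkout", 600), ("purchased", 480)]
steps, overall = funnel_report(stages)
for step, rate in steps:
    print(f"{step}: {rate:.0%}")
print(f"overall: {overall:.1%}")
weakest = min(steps, key=lambda row: row[1])
print("lowest step conversion:", weakest[0])
```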

Market Basket Analysis

- Purchase Patterns: Identifying items frequently bought together to inform product bundling and cross-selling strategies.

- Inventory Placement: Optimizing product placement on websites to increase visibility and sales based on purchase correlations.
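Market basket analysis is usually expressed as association rules scored by three measures:

- Support: how often the items appear together.
- Confidence: how often Y is bought when X is.
- Lift: confidence relative to how often Y is bought anyway. A lift above 1 suggests the pair co-occurs more than chance would predict.

The sketch below handles item pairs only, with hypothetical baskets and a hypothetical support threshold. Algorithms such as Apriori or FP-Growth extend the same measures to larger itemsets efficiently.

```python
from collections import Counter
from itertools import combinations


def pair_rules(transactions, min_support=0.4):
    """Association rules X -> Y for item pairs, with support, confidence and lift."""
    n = len(transactions)
    item_counts = Counter(item for basket in transactions for item in set(basket))
    pair_counts = Counter(
        pair for basket in transactions for pair in combinations(sorted(set(basket)), 2)
    )
    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n  # share of baskets containing both items
        if support < min_support:
            continue
        for x, y in ((a, b), (b, a)):
            confidence = count / item_counts[x]  # P(y in basket | x in basket)
            lift = confidence / (item_counts[y] / n)  # > 1: together more than by chance
            rules.append((x, y, support, confidence, lift))
    return sorted(rules, key=lambda rule: rule[4], reverse=True)


# Hypothetical baskets
baskets = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "cereal"},
    {"bread", "butter", "jam"},
    {"milk", "cereal", "bananas"},
]
for x, y, support, confidence, lift in pair_rules(baskets):
    print(f"{x} -> {y}: support={support:.2f}, confidence={confidence:.2f}, lift={lift:.2f}")
```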

By harnessing the power of data science, social media and e-commerce platforms can deliver highly personalized and efficient experiences to their users. This not only enhances customer satisfaction but also drives business growth through optimized marketing strategies and operational efficiencies.

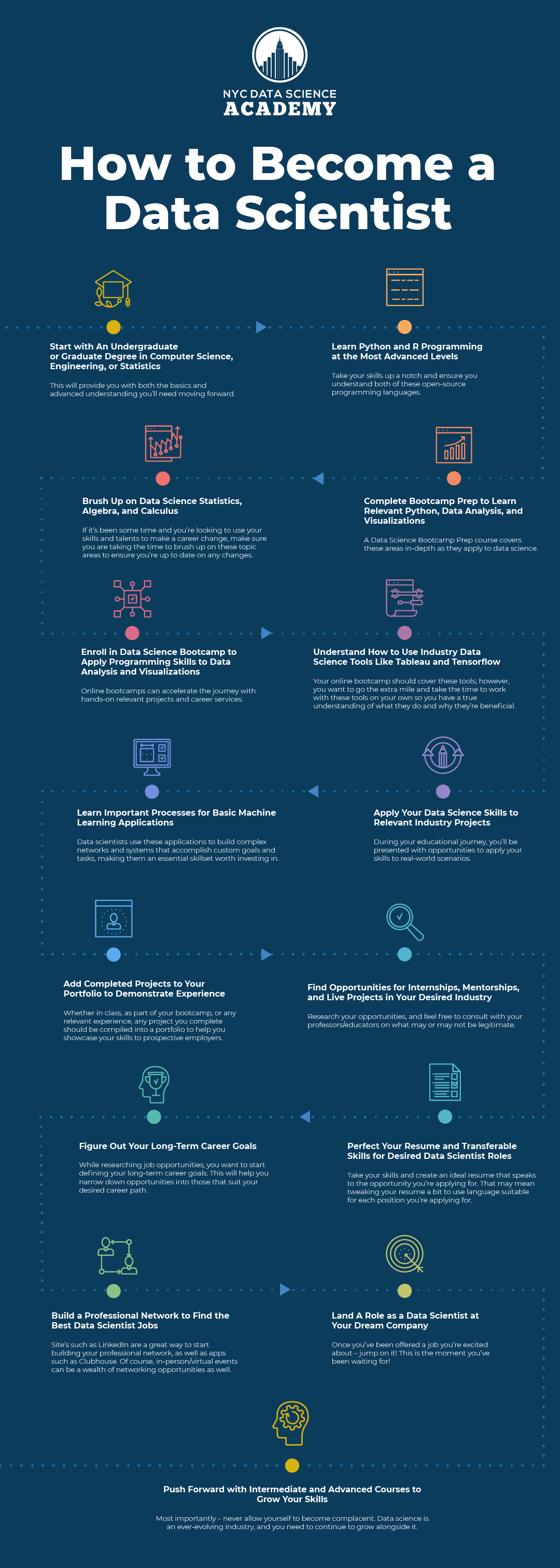

How to Start a Career in Data Science

Embarking on a career in data science involves a combination of acquiring essential skills, gaining practical experience, and building a strong professional network. Here’s a comprehensive guide to help aspiring data scientists navigate their career path successfully.

Learning Path for Aspiring Data Scientists

Starting a career in data science requires a structured learning path that encompasses foundational knowledge, technical skills, and practical experience.

1. Fundamental Skills

- Mathematics and Statistics: A strong understanding of probability, statistics, linear algebra, and calculus is essential for data analysis and model building.

- Programming: Proficiency in programming languages such as Python or R is crucial for data manipulation, analysis, and machine learning.

- Data Manipulation: Skills in handling, cleaning, and transforming data using libraries like Pandas (Python) or dplyr (R).

2. Online Courses and Certifications

- Beginner-Friendly Courses: Enroll in courses that cover the basics of data science, including data analysis, machine learning, and data visualization. Platforms like Coursera, edX, and Udacity offer comprehensive programs.

  - Example: The IBM Data Science Professional Certificate on Coursera covers data analysis, machine learning, and data visualization using tools like SQL and Python. This certification typically requires around 140 hours to complete.

- Advanced Certifications: For those looking to specialize, consider advanced certifications that delve deeper into specific areas of data science.

  - Example: Microsoft Certified: Azure Data Scientist Associate focuses on implementing machine learning models on Azure.

  - Example: The Professional Certificate in Data Science from Harvard University covers topics such as R programming, machine learning, and big data technologies.

3. Practical Experience

- Projects: Engage in real-world projects that allow you to apply theoretical knowledge. Platforms like Kaggle offer competitions and datasets to enhance your practical skills.

- Internships: Gain hands-on experience through internships in data-driven organizations, providing exposure to industry practices and tools.

- Open Source Contributions: Participate in open-source projects to collaborate with other data scientists and contribute to the community.

4. Building a Portfolio

- Showcase Your Work: Create a portfolio that highlights your projects, demonstrating your problem-solving abilities and expertise in data science.

- GitHub: Use GitHub to host your code so that potential employers can review your coding style and technical understanding.

- Blogging: Write blog posts about your projects to enhance visibility and improve your communication skills, which are essential in data science-related fields.

5. Networking and Continuous Learning

- Join Data Science Communities: Connect with professionals through online forums, LinkedIn groups, and industry events to stay updated on trends and opportunities.

- Continuous Education: Data science is a rapidly evolving field, so continuous learning through courses, webinars, and workshops is vital to keep your skills current.

By following this structured learning path, aspiring data scientists can build a solid foundation, gain practical experience, and position themselves effectively in the competitive job market. Continuous learning and networking further enhance career prospects, ensuring sustained growth and success in the dynamic field of data science.

Top Data Science Certifications and Courses

Certifications and structured courses are invaluable for validating your skills and knowledge in data science. They provide comprehensive training and often include hands-on projects that mirror real-world scenarios. Here are some of the top certifications and courses to consider:

1. IBM Data Science Professional Certificate (Coursera)

- Description: A comprehensive program covering data analysis, machine learning, and data visualization using tools like Python, SQL, and Tableau.

- Duration: Approximately 140 hours.

- Key Modules: Data Science Methodology, Python for Data Science, Data Analysis with Pandas, Machine Learning with Scikit-learn.

- Benefits: Provides a strong foundation in data science, recognized by employers, and includes hands-on projects.

2. Microsoft Certified: Azure Data Scientist Associate

- Description: Focuses on implementing machine learning models on the Azure platform, covering data exploration, feature engineering, and model deployment.

- Exam Required: DP-100: Designing and Implementing a Data Science Solution on Azure.

- Key Modules: Azure Machine Learning, Data Processing, Model Training, Model Deployment.

- Benefits: Validates skills in cloud-based data science, aligns with industry demand for cloud expertise.

3. Professional Certificate in Data Science (Harvard University via edX)

- Description: An in-depth program covering R programming, statistical modeling, machine learning, and big data technologies.

- Duration: Varies, typically several months.

- Key Modules: Data Wrangling, Statistical Inference, Machine Learning, Data Visualization.

- Benefits: Offered by a prestigious institution, covers a wide range of data science topics, includes rigorous coursework.

4. Data Science Specialization (Johns Hopkins University via Coursera)

- Description: A series of courses that cover the entire data science pipeline, from data collection to developing data products.

- Duration: Approximately 11 months.

- Key Modules: R Programming, Regression Models, Practical Machine Learning, Data Product Development.

- Benefits: Comprehensive coverage of data science concepts, hands-on projects, recognized certification.

5. Google Data Analytics Professional Certificate (Coursera)

- Description: Designed for beginners, covering data cleaning, analysis, visualization, and using tools like SQL and R.

- Duration: Approximately 6 months.

- Key Modules: Foundations of Data Analytics, Data Cleaning, Data Visualization with R, Data-Driven Decision Making.

- Benefits: Accessible for beginners, industry-recognized, focused on practical skills and tools.

Choosing the Right Certification:

- Career Goals: Select a certification that aligns with your career aspirations, whether it’s general data science, machine learning, or specialized fields like healthcare analytics.

- Skill Level: Choose certifications that match your current skill level, whether you’re a beginner or an experienced professional looking to upskill.

- Reputation: Opt for certifications from reputable institutions or recognized platforms to ensure credibility and value in the job market.

- Practical Experience: Prioritize programs that offer hands-on projects and real-world applications to enhance your practical skills and portfolio.

Example Pathway:

- Start with foundational courses like the Google Data Analytics Professional Certificate to build basic skills in data analysis and tools.

- Advance to a more comprehensive program such as the IBM Data Science Professional Certificate to deepen your knowledge of machine learning and data visualization.

- Explore industry-specific certifications like the Microsoft Azure Data Scientist Associate if you aim to work with cloud-based data science solutions.

By strategically selecting certifications and courses that match your career goals and skill level, you can build a robust knowledge base and demonstrate your expertise to potential employers.

Building a Data Science Portfolio and Resume

A well-crafted portfolio and resume are crucial for showcasing your skills and securing a job in the competitive field of data science. These tools highlight your expertise, practical experience, and unique contributions, making you stand out to potential employers.

1. Defining Unique Projects

- Originality: Create projects that go beyond common starter projects like basic Kaggle competitions. Focus on projects that reflect real-world problems and demonstrate your problem-solving abilities.

- Diversity: Include a variety of projects that showcase different aspects of data science, such as data cleaning, exploratory analysis, machine learning, and visualization.

- Narrative Approach: Present each project as a case study, detailing the problem, your approach, tools used, and the outcomes. This storytelling element makes your portfolio more engaging and informative.

2. Creating a GitHub Account

- Code Repository: Upload your project code to GitHub, providing a clear structure and documentation to showcase your coding skills and project methodologies.

- Version Control: Use GitHub to track changes and collaborate on projects, demonstrating your ability to manage code effectively.

- Visibility: A well-maintained GitHub profile serves as a public portfolio, allowing employers to review your code and understand your technical proficiency.

3. Writing Blog Posts

- Content Creation: Write detailed blog posts about your projects, explaining the objectives, techniques, and results. This not only demonstrates your expertise but also improves your communication skills.

- SEO Benefits: Well-written blog posts can enhance your online presence, making it easier for potential employers to discover your work through search engines.

- Engagement: Sharing your insights and challenges faced during projects can engage the data science community and encourage feedback and collaboration.

4. Crafting a Comprehensive Resume

- Highlight Skills: Emphasize relevant skills such as programming languages, data manipulation, machine learning, and data visualization tools.

- Project Showcase: Include a dedicated section for your key projects, detailing your role, the technologies used, and the impact of the project.

- Certifications and Education: List relevant certifications, courses, and academic qualifications that bolster your expertise in data science.

- Professional Experience: Describe your work experience, focusing on data-related roles and accomplishments that demonstrate your ability to apply data science techniques effectively.

5. Tailoring Your Portfolio and Resume

- Customization: Customize your portfolio and resume for each job application, aligning your skills and projects with the specific requirements of the position.

- Keywords: Incorporate relevant keywords from the job description to enhance your resume’s visibility in applicant tracking systems (ATS).

- Achievements: Highlight quantifiable achievements, such as improved model accuracy, increased sales through data-driven strategies, or reduced costs via optimized processes.

Comparing Data Science with Related Fields

Understanding the distinct roles and responsibilities of data science compared to related fields like data analytics and data engineering is essential for defining your career path.

- Data Science vs. Data Analytics:

  - Data Science: Focuses on building predictive models and algorithms to uncover hidden patterns and make forecasts.

  - Data Analytics: Primarily concerned with interpreting existing data to provide actionable insights for business decisions.

- Data Science vs. Data Engineering:

  - Data Science: Emphasizes data analysis, modeling, and interpretation to derive insights.

  - Data Engineering: Involves constructing and maintaining data systems and pipelines to prepare data for analysis.

Beyond analytics and engineering, data science also intersects with fields such as artificial intelligence and business intelligence. Looking at each comparison in more depth helps you define your career path and apply your skills effectively.

Data Science vs. Data Analytics: Key Differences

Data science and data analytics are often used interchangeably, but they serve distinct purposes within the broader data ecosystem. Here’s a detailed comparison to highlight their key differences:

In-Depth Comparison:

- Data Science:

  - Predictive Modeling: Involves creating models that can predict future events based on historical data.

  - Machine Learning: Utilizes algorithms to learn from data and improve over time without explicit programming.

  - Big Data Technologies: Employs tools and frameworks to handle and analyze large and complex datasets.

- Data Analytics:

  - Descriptive Analysis: Focuses on summarizing historical data to understand what has happened.

  - Diagnostic Analysis: Investigates the causes behind past outcomes.

  - Visualization: Uses charts and dashboards to present data insights in an easily understandable format.

Conclusion: Data science encompasses a broader and more technical scope, integrating advanced techniques like machine learning and artificial intelligence to predict future outcomes. In contrast, data analytics is more focused on interpreting existing data to provide actionable insights, making it essential for immediate business decision-making.

Data Science vs. Artificial Intelligence: How They Intersect

Data science and artificial intelligence (AI) are closely intertwined, yet they maintain distinct roles within the technology landscape. Understanding their relationship and differences is crucial for leveraging their combined potential effectively.

| Aspect | Data Science | Artificial Intelligence |
|---|---|---|
| Definition | Extracting insights from data using scientific methods | Simulation of human intelligence in machines |
| Core Focus | Data analysis, statistical modeling | Automation, learning, reasoning |
| Techniques | Machine learning, data mining | Neural networks, natural language processing |
| Application Areas | Predictive analytics, data visualization | Robotics, autonomous systems, AI-driven applications |
| Overlap | Uses AI techniques for data analysis and modeling | Uses data science for training and improving models |
| End Goal | Informed decision-making, strategic insights | Intelligent automation, enhancing user experiences |

Interrelationship:

- Data Science Utilizing AI:

  - Machine Learning Models: Data science employs machine learning algorithms, a subset of AI, to analyze data and predict patterns.

  - Natural Language Processing (NLP): Data scientists use NLP techniques to interpret and analyze textual data, enhancing data-driven insights.

- AI Leveraging Data Science:

  - Training Data: AI systems rely on data science methodologies to preprocess and analyze data necessary for training machine learning models.

  - Enhanced Learning: Data science provides the analytical framework to improve AI models, making them more accurate and efficient.

Unique Contributions:

- Data Science:

  - Data Exploration: Focuses on understanding data patterns, correlations, and trends.

  - Predictive Insights: Builds models that forecast future outcomes and trends.

- Artificial Intelligence:

  - Automation: Develops systems that can perform tasks autonomously, mimicking human decision-making.

  - Enhanced Capabilities: Creates intelligent systems that can learn, adapt, and improve over time.

Key Takeaways:

- Complementary Fields: Data science and AI complement each other, with data science providing the data-driven foundation for AI models and AI enhancing data science capabilities through advanced learning algorithms.

- Collaborative Innovation: Together, they drive innovations across industries, from healthcare and finance to manufacturing and entertainment.

Example Use Case: AI-Powered Healthcare Diagnostics

In healthcare, data science is used to analyze patient data and develop predictive models, while AI algorithms interpret medical images to assist in diagnostics. This synergy enhances diagnostic accuracy and facilitates early disease detection, improving patient outcomes.

Understanding the interplay between data science and AI allows organizations to harness their combined strengths, driving intelligent solutions and fostering innovation.

Data Science vs. Business Intelligence: Use Cases

Data science and business intelligence (BI) are both pivotal in leveraging data for strategic decision-making, yet they cater to different aspects of data utilization within organizations. Here’s a comparative analysis of their use cases:

| Feature | Data Science | Business Intelligence |
|---|---|---|
| Objective | Predict future trends and behaviors | Analyze historical data to inform decisions |
| Techniques | Machine learning, predictive modeling | Reporting, dashboards, data visualization |
| Data Scope | Large, complex datasets including unstructured data | Structured data from internal sources |
| Outcome | Predictive insights, automated decision-making | Descriptive insights, performance monitoring |
| Users | Data scientists, analysts | Business managers, executives |
| Tools | Python, R, TensorFlow, Scikit-learn | Tableau, Power BI, SQL, Excel |

Use Cases of Data Science:

- Predictive Analytics:

  - Use Case: Forecasting customer churn in a subscription-based business.

  - Impact: Enables proactive retention strategies, reducing churn rates and increasing customer lifetime value.

- Natural Language Processing (NLP):

  - Use Case: Analyzing customer reviews and feedback to gauge sentiment and identify product improvement areas.

  - Impact: Enhances product quality and customer satisfaction through data-driven insights.

- Recommendation Engines:

  - Use Case: Developing personalized product recommendations for e-commerce platforms.

  - Impact: Increases sales and enhances user experience by tailoring suggestions to individual preferences.

Use Cases of Business Intelligence:

- Operational Reporting:

  - Use Case: Generating monthly sales reports for different regions and product lines.

  - Impact: Provides a clear overview of business performance, facilitating strategic planning and resource allocation.

- Dashboarding:

  - Use Case: Creating real-time dashboards to monitor key performance indicators (KPIs) such as sales volume, revenue, and customer acquisition.

  - Impact: Enables timely decision-making by providing immediate access to critical business metrics.

- Data Warehousing:

  - Use Case: Consolidating data from various departments into a centralized data warehouse for unified reporting and analysis.

  - Impact: Enhances data accessibility and consistency, supporting informed business decisions.

Key Differences in Use Cases:

- Data Science:

  - Proactive Approach: Focuses on predicting future events and automating decision-making.

  - Advanced Techniques: Employs machine learning and statistical models to uncover deep insights.

- Business Intelligence:

  - Reactive Approach: Concentrates on interpreting historical data to understand past performance.

  - Descriptive Techniques: Utilizes reporting and visualization tools to present data in an actionable format.

Conclusion: While data science and business intelligence both aim to leverage data for strategic advantage, data science focuses on predictive and advanced analytics to forecast future trends, whereas business intelligence centers on descriptive analytics to understand and report on past and current business performance. Both fields are complementary, offering comprehensive data-driven solutions that enhance organizational decision-making and operational efficiency.

Future of Data Science

As data science continues to evolve, its future is shaped by emerging trends, advancements in technology, and the ever-increasing demand for data-driven insights. Understanding these developments is crucial for staying ahead in the dynamic field of data science.

Emerging Trends in Data Science

The landscape of data science is continually evolving, driven by technological innovations and changing business needs. Here are some of the key emerging trends that are shaping the future of data science:

1. Increased Automation

- AI Integration: Artificial intelligence is automating routine data science tasks such as data cleaning, preprocessing, and model selection.

- Data Pipelines: Automated data pipelines streamline the process of data ingestion, transformation, and analysis.

- Productivity Boost: Automation allows data scientists to focus on strategic analysis and innovative problem-solving rather than repetitive workflows.
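An automated data pipeline chains ingestion, transformation, and analysis so that each run needs no manual steps. The following is a minimal standard-library sketch; the CSV layout (`region,amount`) and the stage names are assumptions for illustration. In practice, stages like these are usually scheduled and monitored by an orchestrator such as Apache Airflow.

```python
import csv
import io
from collections import defaultdict

def ingest(raw_csv):
    """Ingestion: parse raw CSV text (with a header row) into dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_csv)))

def transform(records):
    """Transformation: normalize region names, convert amounts, drop malformed rows."""
    clean = []
    for row in records:
        try:
            region = row["region"].strip().lower()
            amount = float(row["amount"])
        except (KeyError, AttributeError, TypeError, ValueError):
            continue  # skip a bad row instead of failing the whole run
        clean.append({"region": region, "amount": amount})
    return clean

def analyze(records):
    """Analysis: total amount per region."""
    totals = defaultdict(float)
    for row in records:
        totals[row["region"]] += row["amount"]
    return dict(totals)

def run_pipeline(raw_csv, stages=(ingest, transform, analyze)):
    """Feed each stage's output into the next, with no manual steps in between."""
    data = raw_csv
    for stage in stages:
        data = stage(data)
    return data

raw = "region,amount\nNorth,10\n south ,5.5\nNorth,abc\nSouth,4.5\n"
print(run_pipeline(raw))
```

Keeping each stage a plain function makes it easy to test stages in isolation or swap one out without touching the others.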

2. Automated Machine Learning (AutoML)

- Accessibility: AutoML tools democratize data science by enabling non-experts to build machine learning models without extensive programming knowledge.

- Efficiency: AutoML accelerates the model development process by automating hyperparameter tuning, feature selection, and model evaluation.

- Popular Tools: Platforms such as Google Cloud AutoML (now part of Vertex AI), H2O.ai, and DataRobot offer AutoML solutions designed to improve productivity and model performance.
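At its core, an AutoML tool automates a loop like the one below. It tries several model families and hyperparameter values, scores each on held-out data, and keeps the best. This toy version compares a mean baseline with one-feature k-nearest-neighbour regression. It is a hypothetical illustration, not a description of how any particular product works. Real AutoML systems add smarter search strategies (for example, Bayesian optimization), cross-validation, and automated feature engineering.

```python
from statistics import mean

def mean_baseline():
    """Model family 1: always predict the training mean (no hyperparameters)."""
    def fit(xs, ys):
        m = mean(ys)
        return lambda x: m
    return fit

def knn_regressor(k):
    """Model family 2: 1-D k-nearest-neighbour regression; k is the hyperparameter."""
    def fit(xs, ys):
        train = list(zip(xs, ys))
        def predict(x):
            nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
            return mean(y for _, y in nearest)
        return predict
    return fit

def mse(model, xs, ys):
    """Mean squared error of a fitted model on held-out data."""
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))

def auto_select(train, valid, search_space):
    """Try every (family, hyperparameters) pair; return (score, name, params) of the best."""
    best = None
    for name, family, grid in search_space:
        for params in grid:
            model = family(**params)(*train)  # build, then fit on the training split
            score = mse(model, *valid)
            if best is None or score < best[0]:
                best = (score, name, params)
    return best

search_space = [
    ("mean_baseline", mean_baseline, [{}]),
    ("knn", knn_regressor, [{"k": k} for k in (1, 2, 3)]),
]
train = ([0, 1, 2, 3, 4, 5], [0, 1, 2, 3, 4, 5])  # hypothetical data
valid = ([1.2, 3.2], [1.2, 3.2])
print(auto_select(train, valid, search_space))
```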

3. Generative AI

- Data Synthesis: Generative AI can create synthetic data that mimics the statistical properties of existing datasets, which is valuable for training models where real data is limited or sensitive.

- Content Generation: Applications include generating realistic images, text, and music, expanding the creative possibilities in various industries.

- Model Enhancement: Generative models such as GANs (Generative Adversarial Networks) can augment training data with additional, more diverse examples, which can make models more robust.
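The core idea of synthetic data generation is to learn the distribution of a real dataset and then sample new records from it. GANs learn that distribution with neural networks. The sketch below swaps in a much simpler technique, fitting an independent normal distribution to each numeric column, purely to show the fit-then-sample workflow. The column names are hypothetical. Because this approach ignores correlations between columns, it is not a substitute for a proper generative model.

```python
import random
from statistics import mean, stdev

def fit_gaussians(rows):
    """Estimate each numeric column's mean and standard deviation from real records."""
    params = {}
    for col in rows[0]:
        values = [row[col] for row in rows]
        params[col] = (mean(values), stdev(values))
    return params

def sample_synthetic(params, n, seed=None):
    """Draw n synthetic records, sampling each column independently from its fitted normal."""
    rng = random.Random(seed)
    return [{col: rng.gauss(mu, sigma) for col, (mu, sigma) in params.items()} for _ in range(n)]

real = [{"age": 30, "income": 50.0}, {"age": 40, "income": 60.0}, {"age": 50, "income": 70.0}]
params = fit_gaussians(real)
synthetic = sample_synthetic(params, n=5, seed=42)
print(synthetic)
```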

4. Ethical and Responsible AI

- Bias Mitigation: Identifying and reducing biases in data and algorithms so that outcomes are fair across different groups of people.

- Regulatory Compliance: Adhering to evolving regulations and standards related to data privacy, security, and ethical AI use.

- Transparency: Developing explainable AI models that provide clear insights into decision-making processes, fostering trust and accountability.
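One simple, common bias check compares the rate of positive predictions across groups, a measure known as the demographic parity difference. The sketch below assumes binary (0/1) predictions and a single group attribute; the group labels are hypothetical. Demographic parity is only one of several fairness criteria, and different criteria can conflict with one another.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for pred, group in zip(predictions, groups):
        counts[group][0] += pred
        counts[group][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}

def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

preds = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(preds, groups), demographic_parity_difference(preds, groups))
```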

5. Data-Driven Decision-Making

- Strategic Insights: Leveraging predictive analytics and real-time data to inform strategic business decisions.

- Operational Improvements: Enhancing operational processes through continuous data analysis and feedback loops.

- Cross-Functional Integration: Integrating data insights across various business functions, promoting a cohesive and informed organizational approach.

6. Edge Computing

- Real-Time Processing: Performing data analysis and processing at the edge of the network, closer to data sources, reducing latency.

- IoT Integration: Enabling real-time analytics and decision-making in Internet of Things (IoT) devices and applications.

- Scalability: Distributing processing across many devices and locations, which reduces the load on central servers and the volume of data sent over the network.

7. Natural Language Processing (NLP) Advancements

- Text Analysis: Improving the ability to analyze and interpret large volumes of textual data, enhancing applications like chatbots and sentiment analysis.

- Language Models: Developing more sophisticated language models, such as GPT-4 and its successors, that better understand context and nuance.

- Conversational AI: Enhancing the capabilities of conversational agents to engage in more human-like interactions, improving user experiences.

8. Quantum Computing

- Enhanced Computational Power: Quantum computers may eventually solve certain complex problems that are intractable for classical computers, although practical benefits for data science are still largely experimental.

- Algorithm Development: Exploring quantum algorithms that could accelerate machine learning and optimization tasks.

- Research and Development: Fostering innovation in data science through advancements in quantum computing technology.

The Role of AI and Automation in Data Science

Artificial Intelligence (AI) and automation are integral to the advancement of data science, enhancing its capabilities and expanding its applications. The synergy between AI and data science drives significant efficiencies, innovation, and scalability in data-driven projects.

1. Enhancing Data Processing

- Automated Data Cleaning: AI-powered tools automate the identification and correction of data inconsistencies, reducing manual effort and increasing accuracy.

- Data Integration: AI facilitates the seamless integration of data from diverse sources, streamlining the data ingestion process and ensuring comprehensive datasets.
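Automated cleaning tools apply rules like the ones below across every record: normalizing inconsistent text, dropping exact duplicates, and filling missing values. This sketch hard-codes the rules for clarity, and the field names are hypothetical. AI-powered tools would instead learn or suggest such corrections. Filling missing numbers with the column median is one common imputation choice, not the only one.

```python
from statistics import median

def clean_records(records, text_fields, numeric_fields):
    """Normalize text, drop exact duplicates, and fill missing numbers with the column median."""
    cleaned, seen = [], set()
    for rec in records:
        rec = dict(rec)  # copy so the caller's data is not mutated
        for field in text_fields:
            if isinstance(rec.get(field), str):
                # Collapse repeated whitespace and standardize capitalization.
                rec[field] = " ".join(rec[field].split()).title()
        key = tuple(sorted(rec.items()))
        if key in seen:
            continue  # exact duplicate after normalization
        seen.add(key)
        cleaned.append(rec)
    for field in numeric_fields:
        present = [r[field] for r in cleaned if r.get(field) is not None]
        fill = median(present) if present else None
        for r in cleaned:
            if r.get(field) is None:
                r[field] = fill
    return cleaned

raw = [
    {"city": "  new   york", "sales": 10},
    {"city": "New York", "sales": 10},
    {"city": "boston", "sales": None},
    {"city": "Chicago", "sales": 30},
]
print(clean_records(raw, text_fields=["city"], numeric_fields=["sales"]))
```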

2. Accelerating Machine Learning

- Automated Model Selection: AI algorithms can automatically select the most appropriate machine learning models based on data characteristics and desired outcomes.

- Hyperparameter Optimization: AI-driven techniques expedite the tuning of model hyperparameters, improving model performance without extensive manual intervention.

3. Improving Predictive Accuracy

- Ensemble Methods: Techniques such as bagging, boosting, and stacking combine multiple models to improve predictive accuracy and robustness.

- Deep Learning: Advanced AI models, such as deep neural networks, capture complex patterns and relationships in data, leading to more accurate predictions.
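The simplest ensemble is a majority (hard) vote: each model predicts a label, and the most common label wins. In the sketch below, three hypothetical rule-based "models" stand in for trained classifiers. Bagging, boosting, and stacking are more sophisticated variants of the same idea of combining several predictors.

```python
from collections import Counter

def majority_vote(models, x):
    """Return the label predicted by the most models (ties go to the first label seen)."""
    votes = [model(x) for model in models]
    return Counter(votes).most_common(1)[0][0]

# Hypothetical stand-ins for trained fraud classifiers.
def large_amount(tx):
    return "fraud" if tx["amount"] > 1000 else "ok"

def foreign_card(tx):
    return "fraud" if tx["foreign"] else "ok"

def night_time(tx):
    return "fraud" if tx["hour"] < 6 else "ok"

models = [large_amount, foreign_card, night_time]
print(majority_vote(models, {"amount": 1500, "foreign": True, "hour": 14}))
```

Because each rule errs in different situations, the vote can be more reliable than any single rule; the same reasoning motivates ensembles of real models.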

4. Facilitating Real-Time Analytics

- Stream Processing: AI enables the real-time analysis of data streams, providing immediate insights and facilitating instant decision-making.

- Edge AI: Deploying AI models at the edge of the network allows for on-device data processing, reducing latency and enhancing responsiveness.
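Stream processing handles records one at a time as they arrive, instead of waiting for a complete batch. In this minimal sketch, a generator keeps a fixed-size window of recent readings and flags any reading far above the window's average. The window size and threshold are arbitrary assumptions. Production systems would typically run this kind of logic inside a dedicated stream-processing framework.

```python
from collections import deque

def detect_spikes(stream, window=5, threshold=2.0):
    """Yield (reading, is_spike) for each reading as it arrives.

    A reading counts as a spike if it exceeds `threshold` times the average of
    the previous `window` readings. Works on any iterable, including endless ones.
    """
    recent = deque(maxlen=window)
    for value in stream:
        is_spike = len(recent) == window and value > threshold * (sum(recent) / window)
        yield value, is_spike
        recent.append(value)

for reading, spike in detect_spikes([10, 10, 10, 10, 10, 50, 10]):
    print(reading, "SPIKE" if spike else "")
```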

5. Advancing Natural Language Processing (NLP)

- Text Analysis: AI-driven NLP techniques improve the ability to understand and interpret large volumes of textual data, enhancing applications like sentiment analysis and language translation.

- Conversational Agents: AI-powered chatbots and virtual assistants offer more natural and efficient interactions with users, improving customer service and engagement.
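To make sentiment analysis concrete, here is a deliberately tiny lexicon-based scorer. The word lists are hypothetical and far too small for real use. Modern NLP systems learn sentiment from data with trained models rather than fixed word lists, but the input (raw text) and output (a polarity score) have the same shape.

```python
import re

POSITIVE = {"good", "great", "excellent", "love", "fast"}  # hypothetical lexicon
NEGATIVE = {"bad", "poor", "terrible", "hate", "slow"}

def sentiment_score(text):
    """Score in [-1, 1]: (positive hits - negative hits) / total hits, or 0.0 if no hits."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(word in POSITIVE for word in words)
    neg = sum(word in NEGATIVE for word in words)
    return 0.0 if pos + neg == 0 else (pos - neg) / (pos + neg)

print(sentiment_score("Great product, fast delivery!"))
print(sentiment_score("Good price but poor quality"))
```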

6. Automating Insight Generation

- Automated Reporting: AI tools generate reports and dashboards automatically, saving time and ensuring that stakeholders receive timely updates.

- Insight Discovery: AI algorithms uncover hidden patterns and correlations in data that may not be apparent through manual analysis, providing deeper insights.

The Future Demand for Data Scientists

The demand for data scientists is projected to grow substantially in the coming years, fueled by the increasing reliance on data-driven decision-making across industries. Understanding the factors behind this demand, and the opportunities it creates, is essential for aspiring data scientists.

1. Explosive Data Growth

- Volume: The sheer volume of data generated globally is expanding rapidly, necessitating skilled professionals to manage and analyze it.

- Variety: Diverse data types, including structured, unstructured, and semi-structured data, require versatile analytical skills.

- Velocity: The speed at which data is generated and needs to be processed demands efficient data handling and real-time analytics.

2. Industry Adoption

- Healthcare: From predictive diagnostics to personalized medicine, data science is revolutionizing patient care and healthcare operations.

- Finance: Enhancing fraud detection, risk management, and personalized financial services through advanced data analysis.

- Retail and E-commerce: Optimizing inventory, personalizing customer experiences, and driving sales through data-driven insights.

- Technology and IT: Developing smarter technologies and enhancing cybersecurity through data science applications.

3. Technological Advancements

- AI and Machine Learning: Continued advancements in AI and ML technologies are expanding the scope and capabilities of data science.

- Big Data Technologies: Innovations in big data tools and platforms enable the processing and analysis of massive datasets efficiently.

- Cloud Computing: The proliferation of cloud-based data services provides scalable and flexible data storage and processing solutions.

4. Competitive Advantage

- Strategic Insights: Organizations leverage data scientists to gain strategic insights that drive business growth and innovation.

- Operational Efficiency: Data-driven optimizations lead to cost savings and enhanced operational performance.

- Innovation Catalyst: Data scientists contribute to the development of new products, services, and business models through innovative data applications.

5. Job Market Projections

- Employment Growth: Some industry estimates project that demand for data scientists could grow by as much as 200% by 2026, reflecting the increasing importance of data science in business strategy and operations.

- Global Market Size: One market estimate puts the global data science market at approximately USD 322.9 billion by 2025, growing at a compound annual growth rate (CAGR) of 27.7%.

- Job Creation: Around 11.5 million new data science jobs are projected to be created, particularly in roles that combine data analytics with artificial intelligence and machine learning.
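Market projections like these are usually expressed as a compound annual growth rate. Over n years, CAGR = (V_end / V_start)^(1/n) - 1. The helpers below compute CAGR and, inversely, project a value forward at a given rate. The example values are illustrative only and do not reproduce the market figures above, because their base year and starting value are not given here.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1

def project(start_value, rate, years):
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years

print(cagr(100, 121, 2))      # growth rate implied by 100 -> 121 over two years
print(project(100, 0.10, 2))  # 100 compounded at 10% for two years
```

As a sanity check on the rate quoted above, a value growing at 27.7% per year roughly doubles in three years, since 1.277^3 ≈ 2.08.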

Frequently Asked Questions (FAQs)

1. What skills are essential for a data scientist?

Essential skills for a data scientist include proficiency in programming languages like Python and R, strong knowledge of statistics and mathematics, experience with data manipulation and cleaning, expertise in machine learning algorithms, data visualization skills, and familiarity with data storage and processing tools like SQL and Hadoop.

2. How does data science differ from data analytics?

Data science encompasses a broader scope, including predictive modeling, machine learning, and advanced statistical analysis to forecast future trends. Data analytics focuses more on interpreting existing data to provide actionable insights for immediate decision-making and improving business operations.

3. What industries benefit the most from data science?

Industries such as healthcare, finance, retail, e-commerce, marketing, technology, and social media benefit significantly from data science. These sectors leverage data science for improving customer experiences, optimizing operations, enhancing risk management, and driving innovation.

4. What are the best programming languages for data science?

Python and R are the most widely used programming languages for data science, thanks to their extensive libraries and community support. Python is favored for its versatility and ease of use, while R is preferred for statistical analysis and data visualization. SQL is also essential for managing and querying databases.

5. How can I build a strong data science portfolio?

To build a strong data science portfolio, include a variety of unique projects that showcase your skills in data manipulation, analysis, machine learning, and visualization. Use platforms like GitHub to host your code, write detailed blog posts about your projects, and demonstrate your ability to solve real-world problems through case studies and comprehensive documentation.

Key Takeaways

Comprehensive Understanding: Data science integrates statistics, computer science, and domain expertise to extract valuable insights from data.

Essential Components: Key stages include data collection, cleaning, analysis, modeling, and visualization.

Diverse Applications: Data science impacts various industries such as healthcare, finance, marketing, and e-commerce by enhancing decision-making and operational efficiency.

Critical Skills and Tools: Proficiency in programming languages (Python, R), data manipulation libraries (pandas, NumPy), machine learning frameworks (scikit-learn, TensorFlow), and visualization tools (Matplotlib, Seaborn) is essential.

Growing Demand: The demand for data scientists is projected to grow rapidly, driven by the increasing reliance on data-driven strategies across industries.

Future Trends: Emerging trends include increased automation, generative AI, ethical AI practices, and advancements in quantum computing, shaping the future landscape of data science.

Career Pathways: Building a successful career involves acquiring foundational knowledge, gaining practical experience, obtaining relevant certifications, and creating a robust portfolio.

Interdisciplinary Nature: Understanding the distinctions and intersections between data science, data analytics, artificial intelligence, and business intelligence is crucial for specialized career paths.

Ethical Considerations: Responsible use of data and ethical AI practices are paramount to ensure fairness, transparency, and compliance with regulations.

Conclusion

Data science is a transformative force, reshaping industries by unlocking the power of data to drive informed decision-making, innovation, and operational excellence. This comprehensive guide has explored the multifaceted world of data science, delving into its core concepts, essential tools, diverse applications, and the pathways to building a successful career.